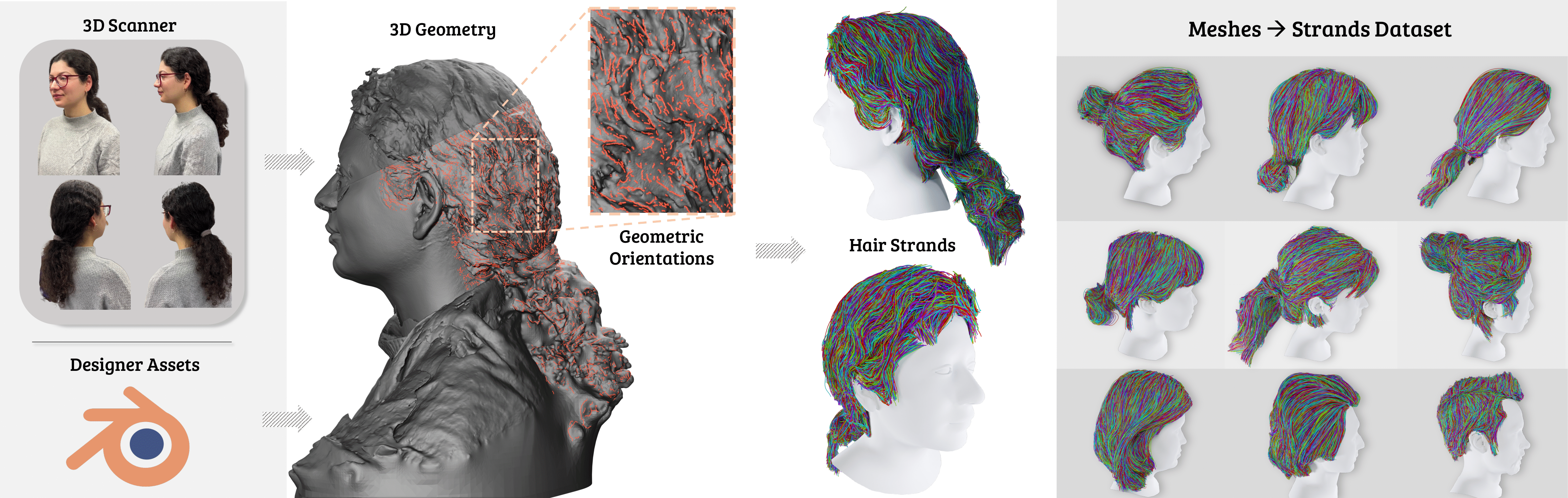

We propose a novel method that reconstructs hair strands directly from colorless 3D scans by leveraging multi-modal hair orientation extraction. Hair strand reconstruction is a fundamental problem in computer vision and graphics that can be used for high-fidelity digital avatar synthesis, animation, and AR/VR applications. However, accurately recovering hair strands from raw scan data remains challenging due to the complex, fine-grained structure of human hair. Existing methods typically rely on RGB captures, which can be sensitive to the environment and from which the orientation of guiding strands is difficult to extract, especially for intricate hairstyles. To reconstruct the hair purely from the observed geometry, our method finds sharp surface features directly on the scan and estimates strand orientation through a neural 2D line detector applied to renderings of the scan shading. Additionally, we incorporate a diffusion prior trained on a diverse set of synthetic hair scans, refined with an improved noise schedule, and adapted to the reconstructed contents via a scan-specific text prompt. We demonstrate that this combination of supervision signals enables accurate reconstruction of both simple and intricate hairstyles without relying on color information. To facilitate further research, we introduce Strands400, the largest publicly available dataset of hair strands with detailed surface geometry extracted from real-world data, which contains hair strands reconstructed from the scans of 400 subjects.

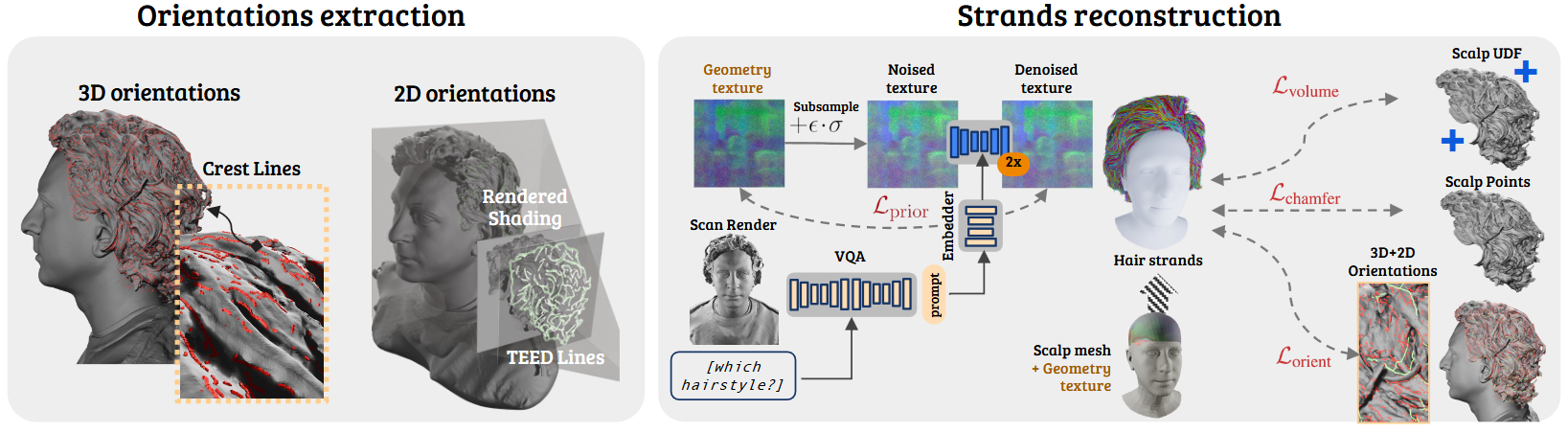

GeomHair consists of two main stages: orientation extraction (left) and strand reconstruction (right). In the orientation extraction stage, we extract complementary orientation signals by combining 3D orientations from crest lines with 2D orientations obtained from TEED features applied to rendered shading of the scans. During reconstruction, we optimize a geometry texture to generate hair strands while enforcing multiple constraints: the orientation loss ($\mathcal{L}_{\text{orient}}$) ensures that strand growth aligns with our extracted 3D+2D orientation field, the volume loss ($\mathcal{L}_{\text{volume}}$) keeps strands near the surface, and the Chamfer distance ($\mathcal{L}_{\text{chamfer}}$) promotes uniform coverage of the hair volume. To enhance realism, we additionally incorporate a diffusion prior ($\mathcal{L}_{\text{prior}}$) conditioned on hairstyle descriptions generated by a VQA model that analyzes the input scan.
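To illustrate why a Chamfer term encourages coverage, here is a minimal sketch of a symmetric Chamfer distance between points sampled on generated strands and points sampled on the scan. It is an illustration under assumptions, not the authors' implementation: the names `nearest_sq_dist`, `chamfer_distance`, `strand_points`, and `scan_points` are hypothetical, and the page does not say whether GeomHair uses a symmetric or one-sided variant, or squared or unsquared distances.

```python
def nearest_sq_dist(p, cloud):
    """Squared Euclidean distance from point p to its nearest neighbour in cloud."""
    return min(sum((a - b) ** 2 for a, b in zip(p, q)) for q in cloud)


def chamfer_distance(strand_points, scan_points):
    """Symmetric Chamfer distance (assumed variant): the mean squared
    nearest-neighbour distance, summed over both directions."""
    if not strand_points or not scan_points:
        raise ValueError("both point sets must be non-empty")
    # Strands -> scan: penalizes strand points that lie far from the scan.
    strands_to_scan = sum(
        nearest_sq_dist(p, scan_points) for p in strand_points
    ) / len(strand_points)
    # Scan -> strands: penalizes scan regions that no strand point reaches.
    scan_to_strands = sum(
        nearest_sq_dist(q, strand_points) for q in scan_points
    ) / len(scan_points)
    return strands_to_scan + scan_to_strands


# Toy example: the strands cover only two of the three scan points.
scan_points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
strand_points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
print(chamfer_distance(strand_points, scan_points))
```

In the toy example every strand point lies exactly on the scan, so the strands-to-scan term is zero. The uncovered scan point at `(2, 0, 0)` alone contributes 1/3 through the scan-to-strands term, and that second direction is what pushes strands to spread over the whole hair region. The brute-force search is quadratic in the number of points, so a practical version would use a spatial index or a batched GPU nearest-neighbour query.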

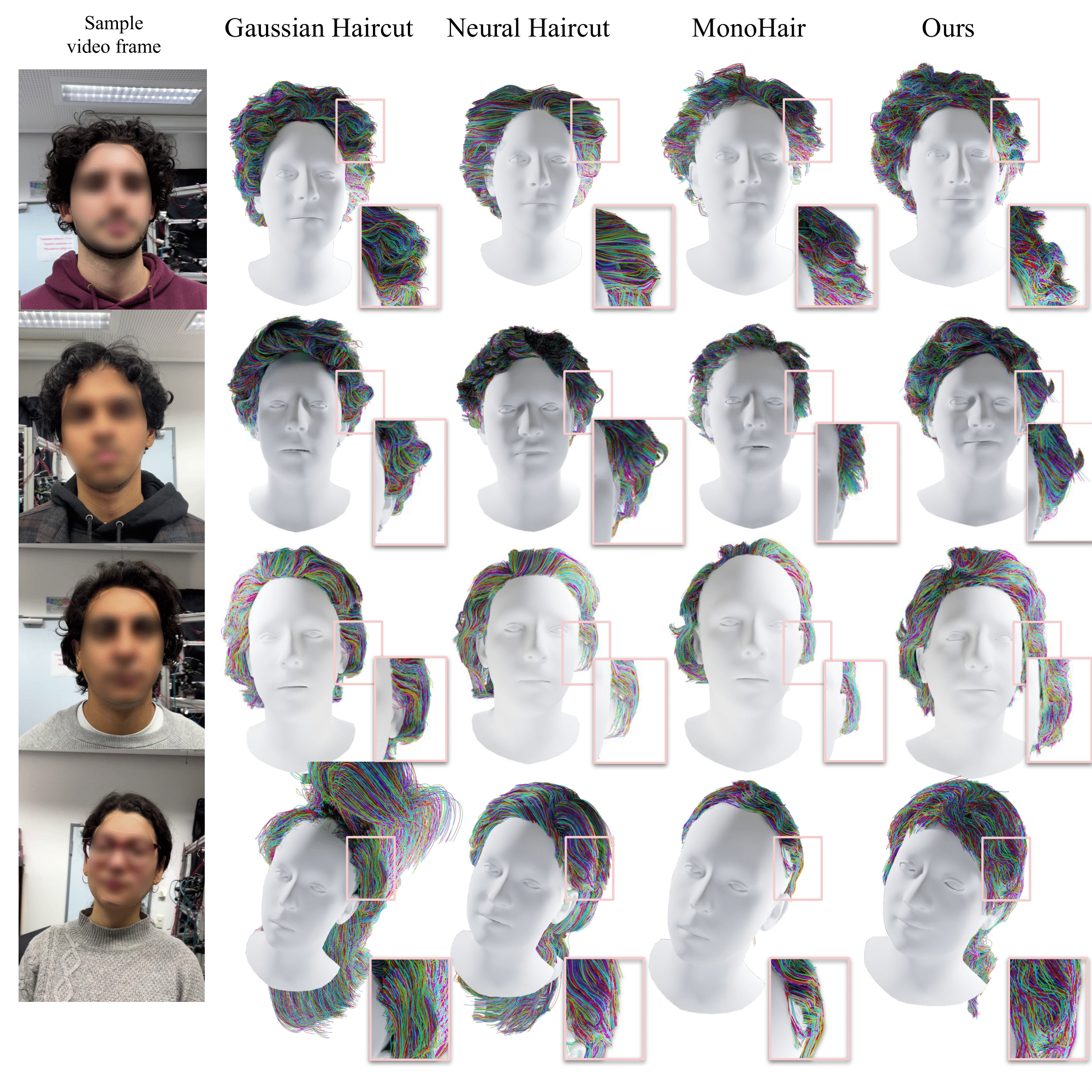

We compare GeomHair against state-of-the-art approaches: NeuralHaircut, MonoHair, and GaussianHaircut. Unlike these methods, which require multi-view RGB images, GeomHair reconstructs hairstyles purely from 3D scans.

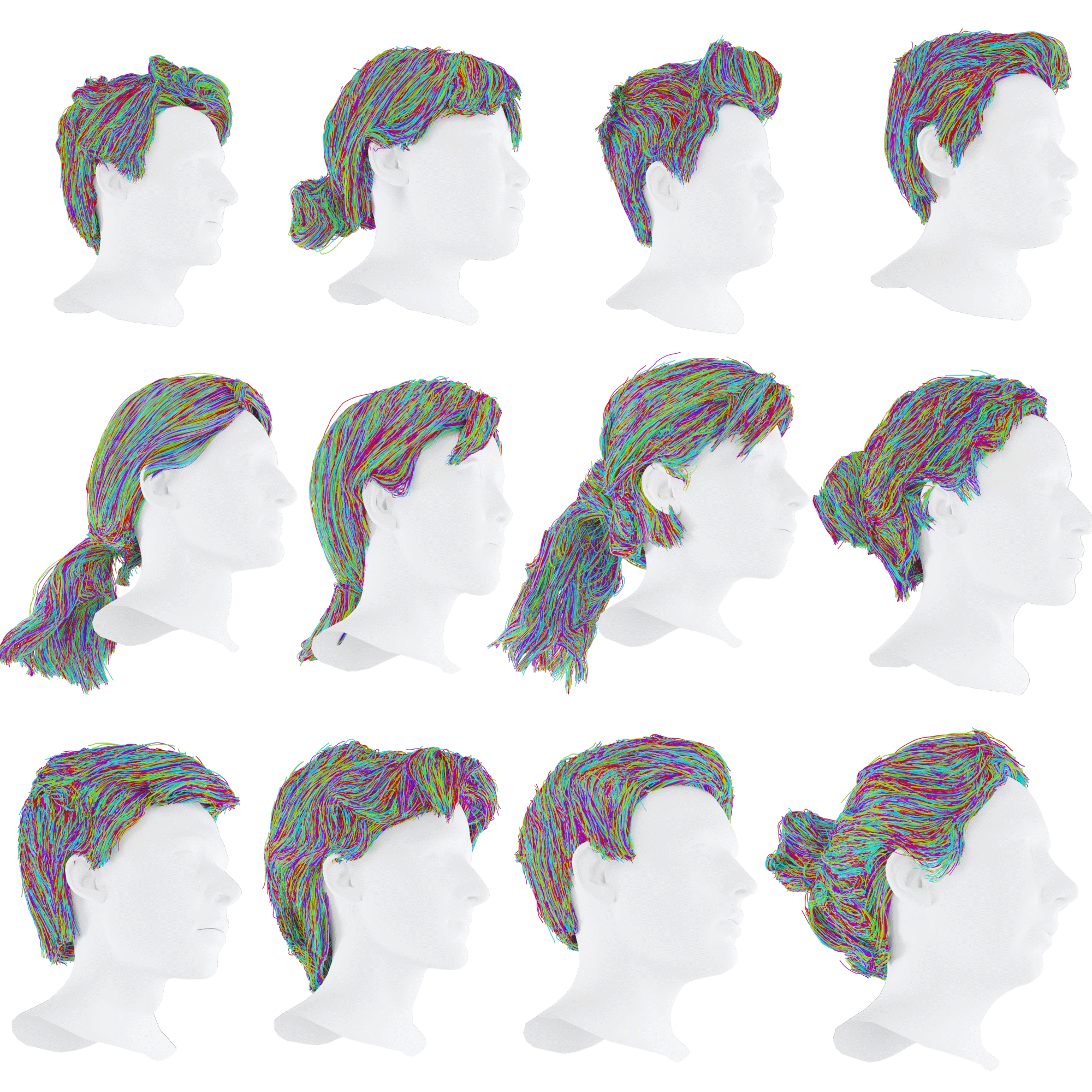

We assembled a dataset of 400 high-resolution scans and applied GeomHair to each. Below, we show sampled strand reconstructions from the Strands400 dataset. The dataset is to be released soon — stay tuned.

@article{lazuardi2025geomhair,
  title={GeomHair: Reconstruction of Hair Strands from Colorless 3D Scans},
  author={Lazuardi, Rachmadio Noval and Sevastopolsky, Artem and Zakharov, Egor and Niessner, Matthias and Sklyarova, Vanessa},
  journal={arXiv preprint arXiv:2505.05376},
  year={2025}
}